Ricing My Backup Strategy: Proxmox, WD Red, and 2.5GbE Replication

Background & Hardware

My home lab runs on a Lenovo M920q with an NVMe drive for performance, but its weakest point was backup storage. Until now I had been using an old 2.5" HDD in a cheap USB enclosure, but it was slow and running out of space. I wanted to “rice it out” using hardware I already had on hand: instead of buying a flashy new NAS, I decided to combine a spare 4TB WD Red, my 2.5GbE network, and my Windows desktop into a high-performance 3-2-1 backup system.

Hardware Verification

I opted for a SATA-to-USB 3.0 enclosure that supports UASP (USB Attached SCSI Protocol) so the USB interface wouldn’t become a bottleneck. UASP is the “secret sauce” here: unlike the older Bulk-Only Transport (BOT) protocol, which processes one command at a time, it supports command queuing, giving higher throughput and lower CPU overhead.

After plugging it in, I verified the UASP driver was active:

```
$ lsusb -t | grep Mass
|__ Port 003: Dev 002, If 0, Class=Mass Storage, Driver=uas, 5000M
```
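The one-liner above works for a quick look. For a repeatable check, here is a minimal sketch that parses the same lsusb -t output and fails if any mass-storage interface fell back to the BOT driver (usb-storage). check_uas is a hypothetical helper name, and it reads from stdin so the parsing can be tested without the enclosure attached.

```bash
#!/usr/bin/env bash
# Hypothetical helper: report the driver bound to each USB Mass Storage interface
# in `lsusb -t` output (read from stdin). "uas" = UASP, "usb-storage" = BOT fallback.
# Exit status: 0 if every mass-storage interface uses uas, 1 if any uses
# something else, 2 if no mass-storage interface was found at all.
check_uas() {
  local line driver found=0 bot=0
  while IFS= read -r line; do
    # Skip hubs and other device classes
    [[ $line == *"Class=Mass Storage"* ]] || continue
    found=1
    # Keep only the text between "Driver=" and the next comma
    driver=${line#*Driver=}
    driver=${driver%%,*}
    echo "$driver"
    [[ $driver == uas ]] || bot=1
  done
  (( found )) || return 2
  return "$bot"
}

# Usage on the Proxmox host:
#   lsusb -t | check_uas && echo "UASP active"
```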

I also confirmed the disk was recognized correctly:

```
$ lsblk -o MODEL,NAME /dev/sda
MODEL                NAME
WDC WD40EFRX-68N32N0 sda
                     └─sda1
```
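USB disks are not guaranteed to come back under the same kernel name after a reboot or replug, and the hdparm and udev settings later in this post both target sda by name. A minimal sketch of a sanity check, assuming lsblk's -d (no partitions) and -n (no header) flags, looks up which device currently carries the WD40EFRX model string. disk_for_model is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the kernel name of the first whole disk whose
# model contains the given substring. Reads `lsblk -dn -o NAME,MODEL` on stdin.
# Returns 1 if nothing matches.
disk_for_model() {
  local want=$1 name model
  # First field is the device name; the rest of the line is the model string
  while read -r name model; do
    if [[ $model == *"$want"* ]]; then
      echo "$name"
      return 0
    fi
  done
  return 1
}

# Usage: confirm the WD Red is still sda before relying on configs that hardcode it
#   dev=$(lsblk -dn -o NAME,MODEL | disk_for_model WD40EFRX) && echo "/dev/$dev"
```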

Setting Up the Disk: Taming the WD Red

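Mechanical NAS drives like the WD Red (EFRX) have aggressive power-saving features. In a Proxmox environment, where the disk is accessed regularly, constant head parking adds latency (the heads must reload before each access) and piles up load/unload cycles, which can lead to premature hardware failure.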

- Disabling Head Parking: I used idle3ctl to disable the drive’s 8-second idle3 (head-parking) timer, which stops the actuator arm from constantly retracting. Note: this is a firmware setting, so it only takes effect after a full power cycle (unplug/replug).

```
$ idle3ctl -d /dev/sda
Idle3 timer disabled
Please power cycle your drive off and on for the new setting to be taken into account. A reboot will not be enough!
```
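Because the idle3 change only applies after a power cycle, it is worth confirming that it actually stuck. One way, sketched here under the assumption that smartmontools is installed and the enclosure passes SMART data through, is to watch attribute 193 (Load_Cycle_Count): every head unload increments it, so it should stop climbing while the disk idles. load_cycle_count is a hypothetical helper that reads smartctl -A output on stdin.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the raw Load_Cycle_Count (SMART attribute 193)
# from `smartctl -A` output on stdin. Returns 1 if the attribute is missing.
load_cycle_count() {
  # In smartctl's attribute table, column 2 is ATTRIBUTE_NAME and column 10 is RAW_VALUE
  awk '$2 == "Load_Cycle_Count" { print $10; found = 1 } END { exit (found ? 0 : 1) }'
}

# Usage after the power cycle: sample twice while the disk is idle.
# Many USB bridges need "-d sat" for smartctl to reach the ATA drive.
#   before=$(smartctl -A -d sat /dev/sda | load_cycle_count)
#   sleep 600
#   after=$(smartctl -A -d sat /dev/sda | load_cycle_count)
#   echo "head parks in 10 minutes: $((after - before))"
```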

- Managing Spindown with hdparm: To ensure the drive doesn’t “sleep” during my backup windows, I disabled its standby timer in the hdparm configuration.

File: /etc/hdparm.conf

```
# spindown_time = 0 disables the standby (spindown) timer
/dev/sda {
    spindown_time = 0
}
```
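hdparm.conf is read when the disk is added (on Debian-based systems such as Proxmox this is typically done by a udev hook), so an already-attached drive keeps its old timer until it is replugged. A minimal sketch for applying and checking the setting right away: hdparm -S 0 disables the standby timer immediately, and hdparm -C reports the current power mode. drive_state is a hypothetical helper that extracts that mode from the output.

```bash
#!/usr/bin/env bash
# Hypothetical helper: extract the power state from `hdparm -C` output on stdin,
# e.g. "active/idle" or "standby".
drive_state() {
  # hdparm prints a line like " drive state is:  active/idle"
  sed -n 's/^[[:space:]]*drive state is:[[:space:]]*//p'
}

# Usage on the Proxmox host:
#   hdparm -S 0 /dev/sda              # apply now: 0 disables the standby timer
#   hdparm -C /dev/sda | drive_state  # should keep reporting active/idle between backups
```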

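- Optimizing the I/O Scheduler: For mechanical HDDs, the BFQ (Budget Fair Queuing) scheduler handles heavy backup streams well: it serves I/O according to per-process budgets, so one large sequential stream is less likely to starve other disk activity. I created a udev rule to apply it automatically.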

File: /etc/udev/rules.d/60-hdd-scheduler.rules

```
ACTION=="add|change", KERNEL=="sda", ATTR{queue/rotational}=="1", ATTR{queue/scheduler}="bfq"
```
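udev rules only act on new events, so a freshly written rule does not touch a disk that is already attached until the rules are reloaded and a change event is triggered (or the disk is replugged). The sketch below lists those udevadm commands in comments; active_scheduler is a hypothetical helper that reads the sysfs scheduler file, where the kernel lists every available scheduler and wraps the active one in brackets.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the active I/O scheduler from the contents of
# /sys/block/<dev>/queue/scheduler (read on stdin), e.g. "mq-deadline kyber [bfq] none".
active_scheduler() {
  # Keep only the bracketed entry
  sed -n 's/.*\[\([^]]*\)].*/\1/p'
}

# Usage: reload rules, re-fire the "change" event for sda, then check.
#   udevadm control --reload
#   udevadm trigger --action=change --subsystem-match=block --sysname-match=sda
#   active_scheduler < /sys/block/sda/queue/scheduler   # expect: bfq
```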

Pulling Backups from Proxmox to Windows

To achieve a true 3-2-1 strategy, I needed a copy off the Proxmox node, so I decided to replicate the /mnt/external/dump directory to the RAID array in my desktop PC.

The Replication Script

I use rclone on Windows because it can connect over SSH (SFTP) and authenticate with my SSH key. Instead of a static config file, I use environment variables (RCLONE_CONFIG_PROX_TYPE, RCLONE_CONFIG_PROX_HOST, and so on) to define an SFTP remote named PROX on the fly. This makes the script portable and easy to manage.

File: sync_proxmox.bat

```bat
@echo off
:: Delayed expansion is not needed, and enabling it would strip the "!" from the [!] messages
SETLOCAL

:: --- Configuration ---
SET "REMOTE_HOST=192.168.1.10"
SET "REMOTE_USER=root"
SET "REMOTE_PATH=/mnt/external/dump"
SET "LOCAL_PATH=D:\Backup\proxmox-backup"

:: Dynamic rclone SFTP remote named PROX, defined via RCLONE_CONFIG_PROX_* env vars
SET "RCLONE_CONFIG_PROX_TYPE=sftp"
SET "RCLONE_CONFIG_PROX_HOST=%REMOTE_HOST%"
SET "RCLONE_CONFIG_PROX_USER=%REMOTE_USER%"
:: Force authentication through the SSH agent (rclone sftp option key_use_agent)
SET "RCLONE_CONFIG_PROX_KEY_USE_AGENT=true"

:: Check for SSH keys in the Windows OpenSSH agent; ssh-add -L exits non-zero if it is empty or unreachable
ssh-add -L >nul 2>&1
if %ERRORLEVEL% NEQ 0 (
    echo [!] Error: SSH agent has no keys. Run ssh-add first.
    pause
    exit /b 1
)

:: Escape the arrow with a caret so cmd does not treat it as a redirect
echo [!] Starting Sync: %REMOTE_HOST%:%REMOTE_PATH% -^> %LOCAL_PATH%

:: Tuned for a single large sequential stream from the HDD
rclone sync "PROX:%REMOTE_PATH%" "%LOCAL_PATH%" ^
    --progress ^
    --transfers 1 ^
    --buffer-size 1024M ^
    --multi-thread-streams 1 ^
    --multi-thread-cutoff 999G ^
    --sftp-concurrency 16 ^
    --sftp-chunk-size 128k ^
    --use-mmap ^
    --links ^
    --sftp-disable-hashcheck ^
    --contimeout 2m ^
    --timeout 10m ^
    --stats 9999h

pause
```

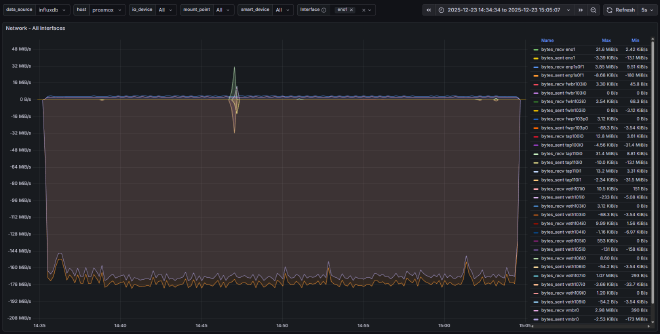

Performance

The results? Sustained speeds of ~170 MiB/s over the network.

As my Grafana metrics show, this effectively saturates the sequential throughput of the WD Red.
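A quick back-of-the-envelope check on where the bottleneck sits, assuming the nominal 2.5GbE line rate and ignoring Ethernet, TCP, and SSH overhead (which lower the usable figure somewhat):

$$
\frac{2.5 \times 10^{9}\ \text{bit/s}}{8\ \text{bit/B}} = 312.5 \times 10^{6}\ \text{B/s} = \frac{312.5 \times 10^{6}}{2^{20}}\ \text{MiB/s} \approx 298\ \text{MiB/s}
$$

$$
\frac{170\ \text{MiB/s}}{298\ \text{MiB/s}} \approx 0.57
$$

The link is running at roughly 57% of its raw ceiling, which leaves headroom on the network side and fits the observation that the WD Red, not the 2.5GbE link, is the limiting factor.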