Modern Web Observability in a Home Lab

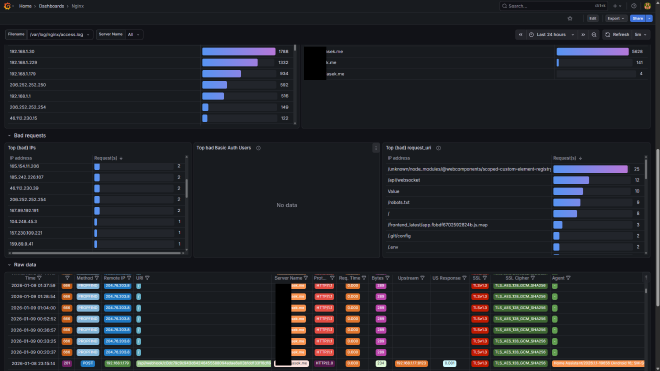

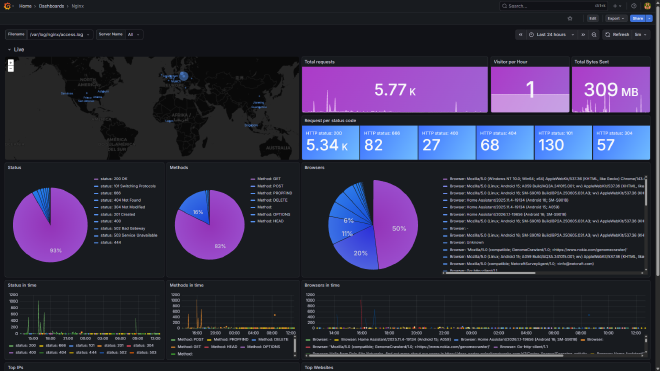

Monitoring a self-hosted stack in a home lab isn’t just about uptime; it’s about knowing exactly who is knocking on your digital door. I’m also a data nerd, and I like to collect data so I can analyze it later. After refining my setup, I’ve moved from basic text logs to a fully enriched, geographical observability dashboard using the PLG stack (Promtail, Loki, Grafana).

I’ll show you how to set up an Nginx gateway that logs JSON with GeoIP data and how to visualize that data in Grafana.

The Architecture

Nginx + PLG Stack

- Nginx identifies the visitor’s location using MaxMind databases and logs it as JSON

- Promtail ships these JSON objects to Loki

- Loki 3.0 handles the storage and retention

- Grafana parses the coordinates and plots them in real-time

Step 1: Nginx JSON Logging & GeoIP

Standard Nginx logs are hard to parse. Switching to JSON makes them machine-readable. By integrating the libnginx-mod-http-geoip2 module and MaxMind databases, we can inject location data directly into every log line.

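Nginx configuration

First, install the libnginx-mod-http-geoip2 package (the name used on Debian and Ubuntu), which provides the geoip2 directive.

In my /etc/nginx/nginx.conf, inside the http block, I defined the GeoIP lookups and a specific JSON log format: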

    geoip2 /etc/nginx/geo/GeoLite2-Country.mmdb {
        auto_reload 5m;
        $geoip2_data_country_code country iso_code;
    }

    geoip2 /etc/nginx/geo/GeoLite2-City.mmdb {
        auto_reload 5m;
        $geoip2_data_city_name city names en;
        $geoip2_data_latitude location latitude;
        $geoip2_data_longitude location longitude;
        # Same variable as in the Country block above; one definition is enough.
        $geoip2_data_country_code country iso_code;
    }

    # Note: $http_cookie writes raw cookies (which may include session tokens) to the log.
    log_format json escape=json '{'
        '"time_local": "$time_local", '
        '"remote_addr": "$remote_addr", '
        '"request_uri": "$request_uri", '
        '"status": "$status", '
        '"server_name": "$server_name", '
        '"request_time": "$request_time", '
        '"request_method": "$request_method", '
        '"bytes_sent": "$bytes_sent", '
        '"http_host": "$http_host", '
        '"http_x_forwarded_for": "$http_x_forwarded_for", '
        '"http_cookie": "$http_cookie", '
        '"server_protocol": "$server_protocol", '
        '"upstream_addr": "$upstream_addr", '
        '"upstream_response_time": "$upstream_response_time", '
        '"ssl_protocol": "$ssl_protocol", '
        '"ssl_cipher": "$ssl_cipher", '
        '"http_user_agent": "$http_user_agent", '
        '"remote_user": "$remote_user", '
        '"geoip_country_code": "$geoip2_data_country_code", '
        '"geoip_city_name": "$geoip2_data_city_name", '
        '"geoip_lat": "$geoip2_data_latitude", '
        '"geoip_lon": "$geoip2_data_longitude"'
    '}';

    access_log /var/log/nginx/access.log json;
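Before wiring up the rest of the stack, it can help to confirm that a logged line really is valid JSON and that the coordinates are usable. The following is a minimal Python sketch, not part of my setup: the sample line and the `parse_geo` helper are made up for illustration, and only a subset of the fields is shown. It assumes that addresses without a database match (private ranges, for example) are logged with empty GeoIP fields.

```python
import json

# Hypothetical sample line in the shape produced by the log_format above
# (values are invented; only a subset of the fields is shown).
SAMPLE = (
    '{"time_local": "10/Mar/2025:14:23:01 +0100", '
    '"remote_addr": "203.0.113.7", "status": "200", '
    '"geoip_country_code": "DE", "geoip_city_name": "Berlin", '
    '"geoip_lat": "52.52", "geoip_lon": "13.405"}'
)


def parse_geo(line: str):
    """Return (country, lat, lon), or None when the line has no usable GeoIP data."""
    entry = json.loads(line)  # raises ValueError if the line is not valid JSON
    lat = entry.get("geoip_lat", "")
    lon = entry.get("geoip_lon", "")
    if not lat or not lon:
        # Assumption: lookups without a match leave these fields empty.
        return None
    # The log format quotes every value, so coordinates arrive as strings.
    return entry.get("geoip_country_code", ""), float(lat), float(lon)


print(parse_geo(SAMPLE))  # ('DE', 52.52, 13.405)
```

Because every value in the log format is quoted, latitude and longitude arrive as strings and need a numeric conversion before they can be plotted.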

Step 2: Loki

Loki is our log storage. Running on a small server (like a 5GB LXC container) means you can’t keep logs forever. In Loki 3.x, retention is handled by the Compactor, which is strict about its configuration (for example, delete_request_store must be set when retention is enabled) but manages disk space efficiently.

Here is my optimized config.yml for 14-day retention:

    auth_enabled: false # setup auth on nginx if needed

    server:
      http_listen_port: 3100
      grpc_listen_port: 9096

    common:
      instance_addr: 127.0.0.1
      path_prefix: /var/lib/loki
      storage:
        filesystem:
          chunks_directory: /var/lib/loki/chunks
          rules_directory: /var/lib/loki/rules
      replication_factor: 1
      ring:
        kvstore:
          store: inmemory

    compactor:
      working_directory: /var/lib/loki/compactor
      compaction_interval: 10m
      retention_enabled: true
      retention_delete_delay: 2h
      retention_delete_worker_count: 50
      # Must be set in Loki 3.x when retention_enabled is true
      delete_request_store: filesystem

    limits_config:
      retention_period: 14d # global retention applied by the compactor
      max_entries_limit_per_query: 5000
      ingestion_rate_mb: 4
      ingestion_burst_size_mb: 8

    # Likely redundant with the tsdb store, where the compactor handles retention
    table_manager:
      retention_deletes_enabled: true
      retention_period: 14d

    query_range:
      results_cache:
        cache:
          embedded_cache:
            enabled: true
            max_size_mb: 100

    schema_config:
      configs:
        - from: 2020-10-24
          store: tsdb
          object_store: filesystem
          schema: v13
          index:
            prefix: index_
            period: 24h

    ruler:
      alertmanager_url: http://localhost:9093

    frontend:
      encoding: protobuf

Step 3: Promtail

Promtail watches the /var/log/nginx/access.log file. Its job is to parse the JSON in each line, take the timestamp and a few low-cardinality labels from it, and pass the line on to Loki.

    server:
      http_listen_port: 9080
      grpc_listen_port: 0

    positions:
      filename: /var/lib/promtail/positions.yaml

    clients:
      - url: http://grafana.local:3100/loki/api/v1/push

    scrape_configs:
    - job_name: nginx_json
      static_configs:
      - targets:
          - localhost
        labels:
          job: nginx
          host: nginx.local
          __path__: /var/log/nginx/access.log

      pipeline_stages:
      # Extract selected fields from the JSON log line
      - json:
          expressions:
            time_local: time_local
            status: status
            request_method: request_method
            server_name: server_name

      # Use the request time from Nginx as the log timestamp (Go reference layout)
      - timestamp:
          source: time_local
          format: "02/Jan/2006:15:04:05 -0700"

      # Promote the extracted fields to Loki labels
      - labels:
          status:
          request_method:
          server_name:
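The timestamp format in the pipeline is a Go reference layout, which can look odd at first. The Python sketch below imitates the three stages on a single line so you can see what each one produces. It only illustrates the idea and is not how Promtail is implemented; the `run_pipeline` helper and the sample line are hypothetical.

```python
import json
from datetime import datetime

# strptime equivalent of the Go layout "02/Jan/2006:15:04:05 -0700"
NGINX_TIME_FORMAT = "%d/%b/%Y:%H:%M:%S %z"
# Fields promoted to labels in the labels stage above
LABEL_KEYS = ("status", "request_method", "server_name")

# Hypothetical log line with invented values
SAMPLE_LINE = (
    '{"time_local": "10/Mar/2025:14:23:01 +0100", "status": "200", '
    '"request_method": "GET", "server_name": "example.local", '
    '"request_uri": "/"}'
)


def run_pipeline(line: str):
    """Mimic the json, timestamp and labels stages for one log line."""
    entry = json.loads(line)                                      # json stage
    ts = datetime.strptime(entry["time_local"], NGINX_TIME_FORMAT)  # timestamp stage
    labels = {k: entry[k] for k in LABEL_KEYS if k in entry}      # labels stage
    return ts, labels


ts, labels = run_pipeline(SAMPLE_LINE)
print(ts.isoformat())  # 2025-03-10T14:23:01+01:00
print(labels)
```

Only fields with a small set of possible values are turned into labels here. Fields like request_uri or remote_addr stay in the log line, where they can still be extracted at query time.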

Step 4: Visualize with Grafana

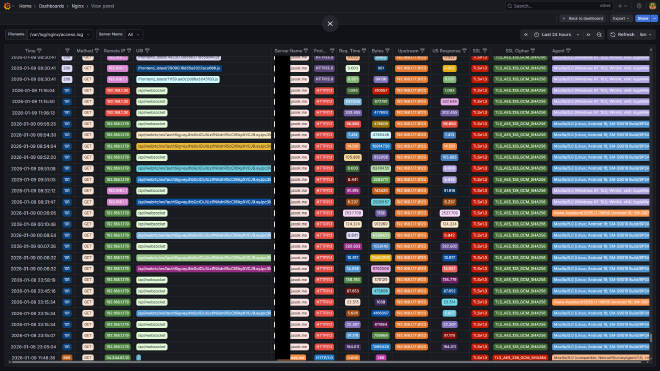

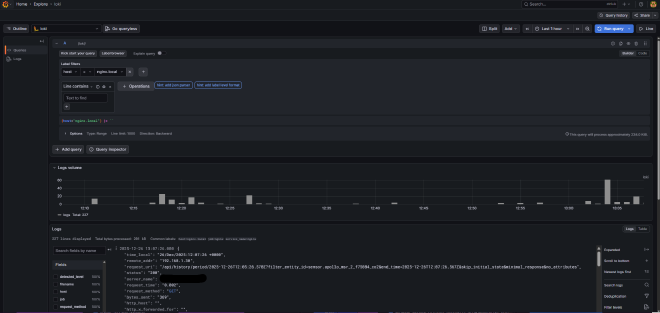

If you have a Grafana instance, you can add a new data source pointing to Loki and create a dashboard. It’s a good idea to explore the data in the Explore tab first.
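The same kind of query you would type into Explore can also be sent to Loki's HTTP API, which is handy for quick checks from a script. The Python sketch below only builds the request URL for the query_range endpoint. The LogQL query (requests per country over one-hour windows) is an example of mine rather than something taken from the dashboard, the `build_query_range_url` helper is hypothetical, and the host is the one from the Promtail client URL above.

```python
from urllib.parse import urlencode

LOKI_URL = "http://grafana.local:3100"  # host taken from the Promtail config

# Example LogQL: count requests per country code in 1h windows,
# skipping lines where the GeoIP lookup returned nothing.
QUERY = (
    'sum by (geoip_country_code) ('
    'count_over_time({job="nginx"} | json | geoip_country_code != "" [1h]))'
)


def build_query_range_url(base: str, query: str, start_ns: int, end_ns: int,
                          step: str = "1h") -> str:
    """Build a GET URL for Loki's /loki/api/v1/query_range endpoint."""
    params = urlencode({
        "query": query,
        "start": start_ns,  # Unix epoch in nanoseconds
        "end": end_ns,
        "step": step,
    })
    return f"{base}/loki/api/v1/query_range?{params}"


end_ns = 1_741_612_981 * 10**9      # an arbitrary example timestamp
start_ns = end_ns - 24 * 3600 * 10**9  # 24 hours earlier
print(build_query_range_url(LOKI_URL, QUERY, start_ns, end_ns))
```

Pasting the value of QUERY into Explore should produce the same series, assuming the labels and JSON field names match the configs above.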

You can download my Grafana Dashboard JSON here. It’s a variation of https://grafana.com/grafana/dashboards/12559-loki-nginx-service-mesh-json-version/.

What I find great about this dashboard is the table data explorer, which makes browsing the data easy thanks to colored values and the ability to filter data live.